January 21, 2026

Artificial Intelligence and the Crisis of Original Thought

Artificial intelligence is not a future concept. The technology has quietly crossed a threshold: it no longer just assists human life, but increasingly shapes how we think, determine truth, form opinions, and understand the world around us.

AI is here. It’s woven into search engines, writing tools, design software, customer service, navigation, and decision-making systems. For the first time in history, we have tools that can generate language, ideas, and answers at a scale and speed far beyond human output.

At its best, AI is an excellent tool. It can accelerate research, find patterns, reduce busywork, and help people by organizing information. But there’s a less discussed reality unfolding alongside these benefits, one that has less to do with technology and more to do with how humans relate to it.

We are not just using AI to assist thought. Increasingly, we are using it to replace thought.

The Outsourcing of Thinking

Today, many people turn to AI as a surrogate mind. Instead of wrestling with an idea, sitting in uncertainty, or forming a rough first draft to work through, they ask a machine to produce a finished, fully formulated answer.

Over time, this will change how the human mind behaves (and it probably already has). Think about the significance of it: it's the human mind. The ONE thing that is truly ours. The place where authentic creativity comes from.

Critical thinking is not just about finding facts quickly. It’s more about tolerance for friction. Original thought requires discomfort and even disagreement. Making mistakes, revising assumptions, and learning through error are all part of the process. When answers arrive instantly, cleanly packaged and confidently worded, the muscle of discernment weakens.

The danger isn’t necessarily that AI gives wrong answers (although it does that sometimes, too). The danger is that it gives answers that are just believable enough. Answers that have users saying to themselves, “That sounds right. It must be true.”

At this point, it all becomes consumption. Understanding becomes obsolete. Something that feels correct is treated as good enough, and as more important than something that has been tested through personal experience.

This is how personal discernment quietly degrades: not through choosing to be ignorant, but through convenience.

Artificial Intelligence: From Assistance to Authority

As AI-generated content floods the internet, it’s going beyond just answering questions. It increasingly defines what information looks like.

Articles, headlines, summaries, and more are now routinely written or heavily shaped by AI. Search engines. Social platforms. Even newspapers. Content written with artificial intelligence becomes the dominant voice of what we know (or really, what we think we know).

At this point, the boundary between source and synthesis is blurry.

What was once a human observation becomes a machine-generated abstraction of a machine-generated abstraction. The original truths, the lived experience, the firsthand reporting, and the messy nuance of it all get smoothed out and reduced to nothing.

This is where a deeper, more abstract problem emerges.

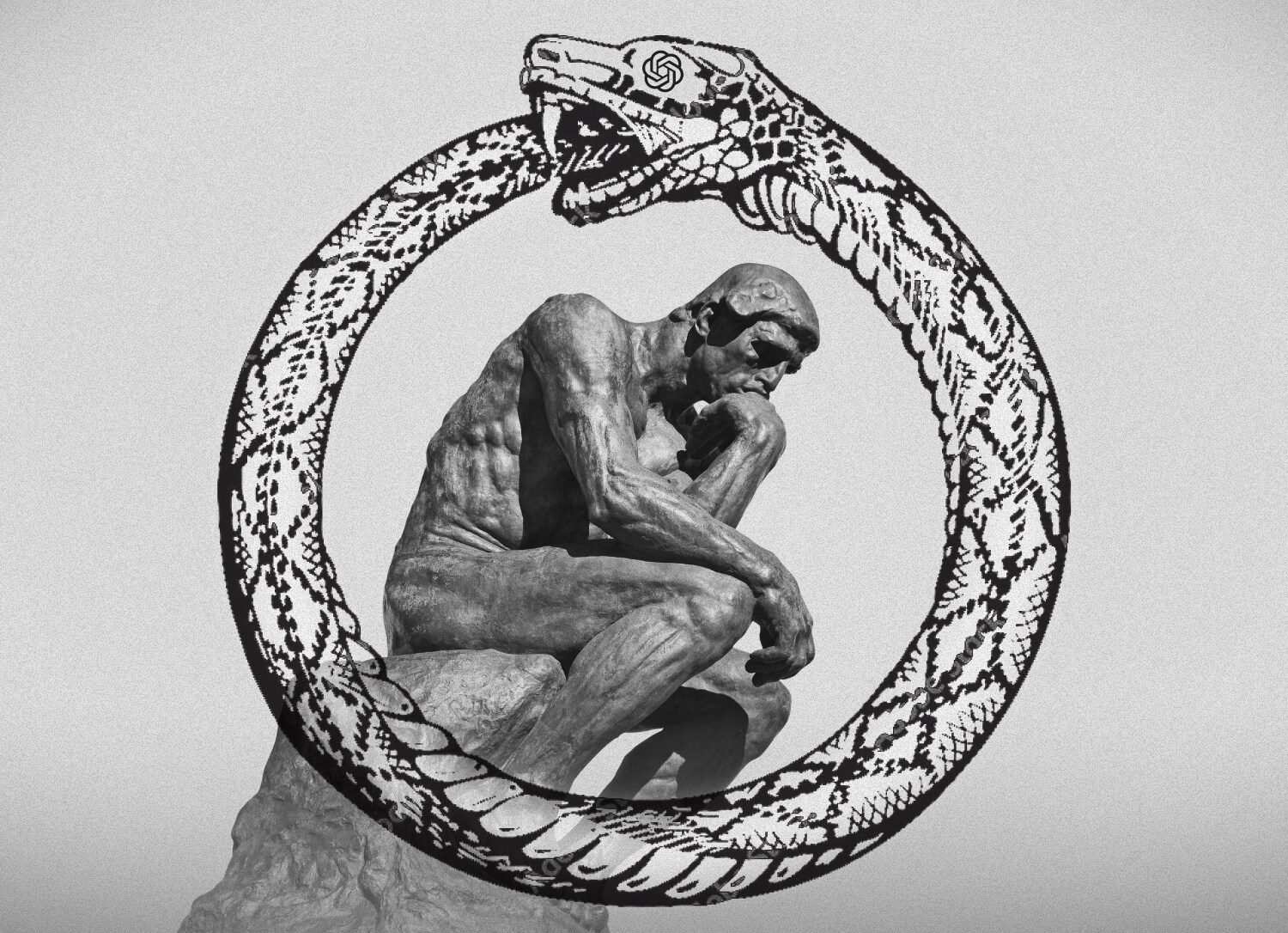

The Snake Eating Its Own Tail

Imagine a library that once held only handwritten books, each written by someone who witnessed something, studied something, or thought deeply about a question.

Now imagine a reading robot (AI) appears. It’s intelligent, endlessly productive, and reads incredibly fast. It doesn’t observe the world directly, and it knows nothing on its own. Instead, it reads the existing books and synthesizes the information into new books.

At first, this seems harmless. Helpful, even.

But soon, these new books begin to outnumber the originals. They get copied more often because they’re more concise and more digestible. The librarian (Google) even starts recommending them over the original books.

Eventually, the library fills with books written by machines that learned from other machine-written books. At that point, the system begins to eat its own tail.

Substance is replaced by summaries, and diversity of thought collapses into statistical averages. The library still looks full, but it’s no longer grounded in reality. It's a copy of a copy of a copy of a copy of an original.

This is the risk of learning based on AI-shaped information. It's a slow hollowing-out of truth over time.

The snake doesn’t strike. It circles. And by the time it realizes it’s consuming itself, the original nourishment is gone.

Why Does It Matter?

Truth requires an external anchor, or something outside the system. Reality. Observation. Accountability. Humans provide that anchor because they live in the world and bear responsibility for what they claim. AI does not.

When AI-generated content begins to cite AI-derived sources, authority becomes circular. When search engines and models reinforce each other’s outputs, confidence replaces verification. Knowledge turns inward.

And a society that no longer values contact with original truths won't collapse dramatically and all at once. It slowly becomes shallow and hollow.

Ever heard of the frog in the pot of water that warms so slowly the frog never notices, until the water comes to a boil and the frog dies? Yeah, that's us.

This Is All a Choice, Not an Inevitability

None of this means AI is inherently harmful. The technology is not the villain. The problem is us, handing over our responsibility to think, question, and discern.

AI should never replace our human judgement and discernment.

AI should never replace our original thought and creativity.

AI should never be trusted to make decisions for us.

AI should never become a source of authority.

The future of humanity depends less on how smart our machines become, and more on whether we remain willing to do the work of thinking for ourselves.